Prompt Engineering for Agents (Not Chatbots)

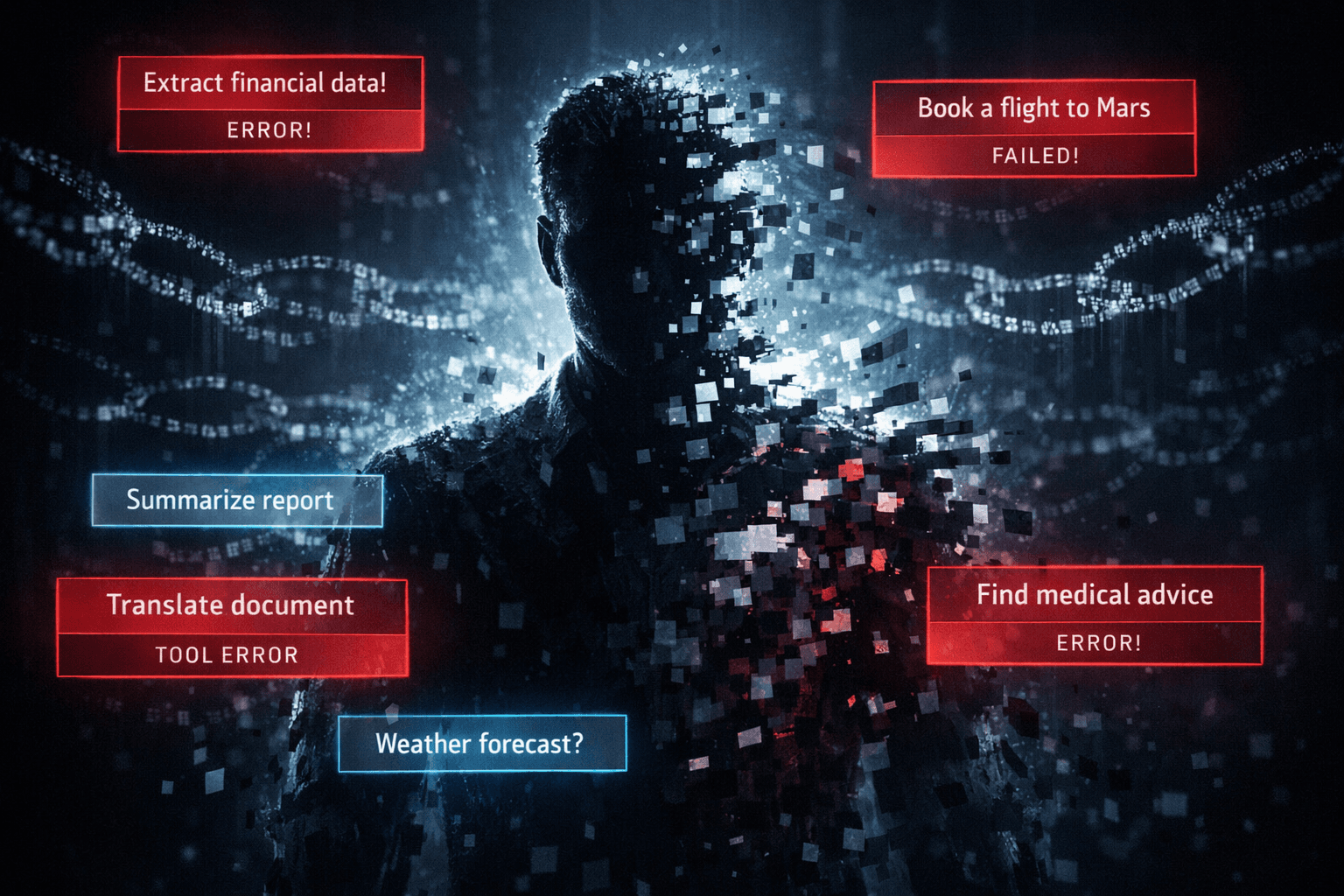

Most “prompt engineering” advice is quietly sabotaging agentic systems. It optimizes for polite conversations, not for software that plans, loops, calls tools, and fails without asking permission. I’ve watched otherwise solid teams ship agents that looked impressive in demos and collapsed in production—because they reused chatbot prompts and hoped autonomy would magically emerge. It doesn’t. Agents don’t need to sound smart. They need to behave predictably under pressure, recover from partial failure, and make decisions with incomplete context. The moment you treat an agent like a chat interface with extra steps, you’ve already lost control of the system.

That distinction matters more than most people want to admit. Chatbots are reactive. Agents are operational. Prompting for one and prompting for the other are fundamentally different disciplines, even though the same model sits underneath.

Prompt engineering for agents starts where conversational prompting breaks down. A chatbot exists in a single conversational thread, bounded by turn-taking and user intent. An agent exists inside a loop. It wakes up, observes state, decides, acts, evaluates, and repeats. Sometimes it does this hundreds of times without human input. The prompt is not a greeting; it is an operating contract. It defines what the agent is allowed to do, what it must never do, how it should think about uncertainty, and how it should surface failure.
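To make the loop concrete, here is a minimal sketch of an observe, decide, act cycle with hard stopping conditions. Everything in it is hypothetical and chosen for illustration: the names `run_agent` and `LoopResult`, the `"STOP"` and `"ESCALATE"` sentinels, and the step limit. It is not any framework's API. In a real system, `decide` would wrap a model call.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple


@dataclass
class LoopResult:
    status: str  # "done", "escalated", or "step_limit"
    steps: int
    log: List[Tuple[str, Any]] = field(default_factory=list)


def run_agent(
    observe: Callable[[], Any],
    decide: Callable[[Any], str],
    act: Callable[[str], Any],
    max_steps: int = 5,
) -> LoopResult:
    """Run observe -> decide -> act until a stop condition is hit."""
    log: List[Tuple[str, Any]] = []
    for step in range(1, max_steps + 1):
        state = observe()
        action = decide(state)
        if action == "STOP":        # explicit stopping condition
            return LoopResult("done", step, log)
        if action == "ESCALATE":    # hand off instead of guessing
            return LoopResult("escalated", step, log)
        log.append((action, act(action)))
    # The loop never runs forever: the step limit is enforced in code.
    return LoopResult("step_limit", max_steps, log)


# Toy usage: drain a two-item queue, then stop.
queue = ["a", "b"]
result = run_agent(
    observe=lambda: list(queue),
    decide=lambda state: "process" if state else "STOP",
    act=lambda action: queue.pop(0),
)
print(result.status, result.steps)  # done 3
```

The point of the sketch is that the stop conditions live in the loop, not in the prompt's tone. The prompt tells the model when to emit a stop or escalation signal; the code guarantees the loop ends either way.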

I’ve seen teams obsess over clever phrasing while ignoring the more dangerous problem: agents follow instructions too literally. A friendly, verbose system prompt that works fine in chat becomes a liability once the agent is embedded in an orchestration layer. Agents don’t need encouragement. They need constraints. They need narrow authority, explicit stopping conditions, and a clear model of the world they’re acting in.

This is where treating agents like chatbots breaks at scale, and why so many early deployments feel brittle. Once you move beyond toy examples, you start confronting issues of memory drift, tool misuse, runaway loops, and silent degradation. If you’re building systems meant to operate continuously, the architectural mindset outlined in “AI Agents: A Practical Guide for Building, Deploying, and Scaling Agentic Systems” becomes non-negotiable, because prompts alone cannot compensate for missing structure.

How prompt engineering changes for AI agents

Prompt engineering changes for agents because the prompt is no longer the primary interface with a human. It’s an internal control surface. In agentic systems, prompts are closer to configuration files than conversation starters. They define roles, invariants, and failure behavior. If your prompt reads like something you’d paste into ChatGPT, you’re already optimizing for the wrong outcome.

The first shift is temporal. Chat prompts assume a short horizon. Agent prompts must survive long-running execution. That means you design for drift. You assume context windows will fill with noise. You assume intermediate reasoning will be lost or summarized. A good agent prompt anticipates this and reasserts priorities repeatedly, often through system-level reinforcement rather than verbose explanation.
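One way to design for drift, sketched under assumptions: keep only the most recent history items and pin a short priorities block at both ends of the context on every iteration. The function name `build_context`, the priorities text, and the choice to repeat the block at the start and end are illustrative, not a prescribed technique.

```python
from typing import List

# Hypothetical priorities block, kept deliberately short.
PRIORITIES = "PRIORITIES: validate before commit; stop on tool failure."


def build_context(priorities: str, history: List[str], max_items: int) -> List[str]:
    """Trim history to the newest items and reassert priorities around it."""
    # history[-0:] would return the whole list, so handle zero explicitly.
    recent = history[-max_items:] if max_items > 0 else []
    return [priorities, *recent, priorities]


history = [f"step {i} output" for i in range(1, 8)]
context = build_context(PRIORITIES, history, max_items=3)
for line in context:
    print(line)
```

Because the context is rebuilt on every turn, the priorities never depend on surviving a summarization pass.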

The second shift is epistemic. Chatbots can hedge. Agents cannot. An agent must know when to act, when to stop, and when to escalate. This requires explicit instruction around uncertainty. I often tell teams to encode ignorance as a first-class concept: spell out what the agent does when data is missing, when tools fail, or when outputs fall below confidence thresholds. These are not edge cases. They are the normal operating conditions of real systems.
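As a rough illustration of treating ignorance as a first-class outcome, the sketch below maps the three conditions named above onto explicit decisions. The mapping itself (tool failure stops, missing data or low confidence escalates) and the 0.8 threshold are assumptions chosen for the example; your system may route them differently.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    ACT = "act"
    STOP = "stop"
    ESCALATE = "escalate"


def triage(
    data: Optional[dict],
    tool_ok: bool,
    confidence: float,
    threshold: float = 0.8,  # assumed value, tune per system
) -> Decision:
    """Turn uncertainty into an explicit decision instead of a guess."""
    if not tool_ok:
        return Decision.STOP        # a failed tool means no basis to act
    if data is None:
        return Decision.ESCALATE    # missing data goes to a human or another agent
    if confidence < threshold:
        return Decision.ESCALATE    # low confidence is not permission to proceed
    return Decision.ACT


print(triage({"amount": 42}, tool_ok=True, confidence=0.95))  # Decision.ACT
print(triage(None, tool_ok=True, confidence=0.95))            # Decision.ESCALATE
```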

The third shift is organizational. Agent prompts are rarely singular. You end up with layered prompts: a core system prompt that defines identity and constraints, task-specific prompts injected by planners, and tool-level instructions that gate behavior at execution time. This is where patterns like planner–executor architectures start to matter, because they separate intent formation from action execution in ways that prompts alone cannot enforce.
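The layering can be sketched as plain message assembly. This assumes the common role/content message format used by chat-style model APIs; the prompt text, the tool names, and the `build_messages` helper are hypothetical.

```python
from typing import Dict, List

# Hypothetical core prompt: identity and constraints only.
CORE_PROMPT = "You reconcile ledger entries. Never modify records without validation."


def build_messages(
    core: str, task: str, tool_rules: Dict[str, str]
) -> List[Dict[str, str]]:
    """Assemble core, tool-level, and task-level layers in a fixed order."""
    messages = [{"role": "system", "content": core}]
    if tool_rules:
        rules = "\n".join(
            f"- {name}: {rule}" for name, rule in sorted(tool_rules.items())
        )
        messages.append({"role": "system", "content": "Tool rules:\n" + rules})
    # The task layer is injected last, typically by a planner.
    messages.append({"role": "user", "content": task})
    return messages


messages = build_messages(
    CORE_PROMPT,
    task="Reconcile batch 17 against the bank export.",
    tool_rules={"fetch_export": "read-only", "flag_mismatch": "max 50 per run"},
)
for message in messages:
    print(message["role"], "|", message["content"])
```

Keeping each layer as a separate value means the core prompt can stay fixed while task and tool layers change per run.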

If you’re using frameworks like LangGraph or the OpenAI Agents SDK, this layering becomes explicit. The prompt is just one node in a graph, not the entire system. That’s a good thing. It forces you to stop pretending language is enough.

Why chat-style prompts fail in agent workflows

Chat-style prompts fail in agent workflows because they assume cooperation instead of enforcement. They rely on tone and implication rather than hard boundaries. In a human conversation, that’s fine. In an autonomous loop, it’s reckless.

I’ve debugged agents that happily ignored tool budgets because the prompt said “use tools when appropriate.” Appropriate to whom? The model interpreted that as permission. The agent spammed APIs, exhausted rate limits, and then hallucinated results to keep the loop alive. From the model’s perspective, it was being helpful. From the system’s perspective, it was a small outage waiting to happen.
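A budget like that is easier to enforce in code than in wording. The sketch below wraps a tool in a hard call limit; `BudgetedTool`, `ToolBudgetExceeded`, and `fake_search` are hypothetical names for illustration, not part of any SDK.

```python
from typing import Any, Callable


class ToolBudgetExceeded(RuntimeError):
    """Raised when a tool is called more often than its budget allows."""


class BudgetedTool:
    def __init__(self, func: Callable[..., Any], max_calls: int) -> None:
        self.func = func
        self.max_calls = max_calls
        self.calls = 0

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        # The limit is checked before the call, so the budget is a hard cap.
        if self.calls >= self.max_calls:
            raise ToolBudgetExceeded(
                f"{self.func.__name__}: budget of {self.max_calls} calls exhausted"
            )
        self.calls += 1
        return self.func(*args, **kwargs)


def fake_search(query: str) -> str:
    # Stand-in for a real API call.
    return f"results for {query}"


search = BudgetedTool(fake_search, max_calls=2)
search("a")
search("b")
try:
    search("c")
except ToolBudgetExceeded as exc:
    print(exc)  # fake_search: budget of 2 calls exhausted
```

The orchestrator can catch the exception and stop the loop, which removes "appropriate" from the model's discretion entirely.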

Chat prompts also encourage verbosity. In agent workflows, verbosity is a tax. Every extra token competes with state, memory, and signals from the environment. Over time, verbose self-talk crowds out the very context the agent needs to behave correctly. This is why agents that start strong often degrade subtly after hours or days of operation.

Another failure mode is false coherence. Chat prompts reward smooth narratives. Agents need jagged honesty. If a tool fails, the agent should say so bluntly and stop, not weave a plausible story. Yet many prompts explicitly instruct agents to “be helpful” and “always provide an answer.” That instruction alone has caused more silent failures than any model bug I’ve encountered.
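Here is one hedged way to make that bluntness structural: wrap every tool call in a result type that carries failure explicitly, so there is nothing for the model to narrate around. `ToolResult`, `call_tool`, and `answer_from_tool` are illustrative names, and the `FAILED:` prefix is an arbitrary convention.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class ToolResult:
    ok: bool
    value: Any = None
    error: Optional[str] = None


def call_tool(func: Callable[..., Any], *args: Any) -> ToolResult:
    """Run a tool and capture failure as data instead of hiding it."""
    try:
        return ToolResult(ok=True, value=func(*args))
    except Exception as exc:
        return ToolResult(ok=False, error=f"{type(exc).__name__}: {exc}")


def answer_from_tool(func: Callable[..., Any], *args: Any) -> str:
    result = call_tool(func, *args)
    if not result.ok:
        # Report the failure verbatim and stop; never substitute a guess.
        return f"FAILED: {result.error}"
    return f"OK: {result.value}"


def flaky_lookup(account_id: str) -> float:
    raise TimeoutError("upstream timed out")


print(answer_from_tool(flaky_lookup, "acct-1"))
# FAILED: TimeoutError: upstream timed out
```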

There’s also the issue of misaligned incentives. Chat-style prompts implicitly optimize for user satisfaction. Agent prompts must optimize for system goals. Those are not the same. A support chatbot can afford to apologize and rephrase. An autonomous agent reconciling financial records cannot. It needs to either succeed deterministically or fail loudly.

This is where insights from “Planning vs Reacting: How AI Agents Decide What to Do Next” become practical rather than theoretical. Planning agents need prompts that support deliberation without encouraging endless rumination. Reactive agents need prompts that privilege speed and guardrails over eloquence. Chat-style prompting does neither well.

I’ll admit, I once tried to salvage a failing agent by “improving” the prompt language. It got more polite. It got more confident. It also got worse. The real fix was cutting the prompt in half and moving logic into code. That lesson stuck.

Designing system prompts for autonomous agents

Designing system prompts for autonomous agents is less about wording and more about governance. You are defining the constitution under which the agent operates. Every ambiguity will be exploited, not maliciously, but statistically.

A strong system prompt starts with authority boundaries. What actions is the agent allowed to take? Under what conditions must it stop? When must it hand off to a human or another agent? These rules should be explicit and testable. If you cannot write a unit test around a prompt constraint, it probably doesn’t belong there.
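To show what "testable" can look like, the sketch below expresses authority boundaries as data and checks them with ordinary assertions. The action names and the three-way allow/handoff/deny split are assumptions made for the example.

```python
# Hypothetical authority boundaries for a reconciliation agent.
ALLOWED_ACTIONS = frozenset({"read_record", "flag_mismatch"})
HANDOFF_ACTIONS = frozenset({"delete_record"})  # requires a human


def check_action(action: str) -> str:
    """Return 'allow', 'handoff', or 'deny' for a proposed action."""
    if action in ALLOWED_ACTIONS:
        return "allow"
    if action in HANDOFF_ACTIONS:
        return "handoff"
    return "deny"  # anything not listed is denied by default


def test_allowed_action_passes() -> None:
    assert check_action("read_record") == "allow"


def test_destructive_action_hands_off() -> None:
    assert check_action("delete_record") == "handoff"


def test_unknown_action_is_denied() -> None:
    assert check_action("send_email") == "deny"


# Run the checks directly; a test runner such as pytest would also collect them.
test_allowed_action_passes()
test_destructive_action_hands_off()
test_unknown_action_is_denied()
print("all boundary checks passed")
```

The same sets can be rendered into the system prompt, so the prompt and the enforcement layer share one source of truth.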

Next comes decision framing. Agents need to know how to choose, not just what to choose. I often encode simple heuristics directly into the prompt: prefer reversible actions, minimize external calls, validate before commit. These are not philosophical statements; they are operational priorities that shape behavior over thousands of iterations.

Memory management deserves special attention. Agents do not “remember” in the human sense. They accumulate artifacts. Your prompt should instruct the agent on what is worth preserving and what should be discarded. Otherwise, everything becomes equally important, and nothing is salient. This is one of the quiet failure modes in multi-agent systems, where shared memory becomes a junk drawer no one can reason about, a problem explored in depth in “Multi-agent Systems: Benefits & Pitfalls in Real Projects”.

Tool calling instructions must be brutally precise. Name the tools. Define preconditions. Define postconditions. Define failure handling. Never assume the model will infer correct usage from examples alone. In production, inference is where things go sideways.
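The four requirements above (name, preconditions, postconditions, failure handling) can be sketched as a small tool specification that the executor checks on every call. `ToolSpec`, `execute`, the status strings, and the `lookup_balance` tool are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ToolSpec:
    name: str
    run: Callable[[Dict[str, Any]], Any]
    precondition: Callable[[Dict[str, Any]], bool]
    postcondition: Callable[[Any], bool]


def execute(spec: ToolSpec, args: Dict[str, Any]) -> Dict[str, Any]:
    """Gate a tool call on its precondition and validate its output."""
    if not spec.precondition(args):
        return {"tool": spec.name, "status": "rejected", "reason": "precondition failed"}
    try:
        output = spec.run(args)
    except Exception as exc:
        return {"tool": spec.name, "status": "error", "reason": str(exc)}
    if not spec.postcondition(output):
        return {"tool": spec.name, "status": "invalid_output", "reason": "postcondition failed"}
    return {"tool": spec.name, "status": "ok", "output": output}


# Hypothetical tool: look up an account balance.
lookup_balance = ToolSpec(
    name="lookup_balance",
    run=lambda args: {"balance": 10.0},
    precondition=lambda args: isinstance(args.get("account_id"), str),
    postcondition=lambda out: isinstance(out, dict)
    and isinstance(out.get("balance"), float),
)

print(execute(lookup_balance, {"account_id": "acct-1"})["status"])  # ok
print(execute(lookup_balance, {})["status"])                        # rejected
```

Each status is a distinct signal the orchestrator can route on, which is more reliable than asking the model to infer correct usage.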

There’s a temptation to keep system prompts stable and push variability into task prompts. In practice, the opposite often works better. Keep the core system prompt minimal and rigid. Let planners and orchestrators inject context dynamically. This reduces the blast radius of prompt changes and makes behavior easier to reason about over time.

A brief digression here, because it matters. Many teams underestimate how much prompt instability contributes to system fragility. They tweak prompts in production the way they tweak copy on a landing page. Every change shifts the agent’s internal policy surface. Without proper evaluation, you’re effectively redeploying a new system each time. I’ve seen subtle prompt edits invalidate weeks of tuning elsewhere in the stack.

The way back from that mess is discipline. Treat prompts as code. Version them. Test them. Roll them out deliberately. When you do, you’ll notice something interesting: the prompt becomes smaller, sharper, and less magical. That’s a good sign.
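A small sketch of what versioning a prompt can mean in practice: fingerprint the prompt text and refuse to run if it differs from the version you evaluated. The helper names and the whitespace normalization are assumptions; a real setup would likely keep prompts in version control alongside their evaluation results.

```python
import hashlib


def prompt_fingerprint(text: str) -> str:
    """Short, stable hash of a prompt, ignoring trailing whitespace."""
    normalized = "\n".join(line.rstrip() for line in text.strip().splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


def assert_pinned(text: str, expected: str) -> None:
    """Fail loudly if the deployed prompt is not the evaluated one."""
    actual = prompt_fingerprint(text)
    if actual != expected:
        raise RuntimeError(f"prompt changed: expected {expected}, got {actual}")


# Hypothetical prompt text, for illustration only.
PROMPT_V1 = "Prefer reversible actions.\nValidate before commit."
pinned = prompt_fingerprint(PROMPT_V1)

assert_pinned(PROMPT_V1, pinned)  # passes silently
try:
    assert_pinned(PROMPT_V1 + "\nBe helpful.", pinned)
except RuntimeError:
    print("prompt edit detected; re-run evaluation before rollout")
```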

Back to the core argument, where the pattern is clear. Prompt engineering for agents is inseparable from system design. You cannot paper over architectural gaps with better phrasing. You cannot compensate for missing orchestration with clever instructions. Language is a lever, not a foundation.

Agentic AI systems reward teams who respect this boundary. They punish those who blur it.

If you’re serious about building agents that survive contact with reality, stop asking how to make prompts smarter and start asking how to make systems more explicit. The models are already capable. Our discipline is what’s lagging.

Sometimes progress comes faster with another brain in the room. If that helps, let’s talk: free consultation at Agents Arcade.

Majid Sheikh is the CTO and Agentic AI Developer at Agents Arcade, specializing in agentic AI, RAG, FastAPI, and cloud-native DevOps systems.