Using MCP (Model Context Protocol) to Let LLMs Call Real Tools

I’m going to be honest with you—this article was born out of annoyance. Two nights ago, I was knee-deep in a debugging session, trying to keep one of our older agent stacks alive. Some fragile Python wrapper around an OpenAI function call was silently failing because the schema drifted somewhere between v1 and v2 of an internal API. One tiny naming mismatch. One miserable 2 a.m. chase through logs, JSON parsing errors, and a dog barking outside like it also hated my code. And the entire thing reminded me of every painful integration hack we've been maintaining for the last year.

If you want a broader, structured overview of how modern AI agents plan, reason, and orchestrate workflows (including tool use, planning, and deployment), check out our practical guide to AI agents.

Here’s the thing: most of us built early “agents” on duct tape. We wrapped tools in little helper scripts, invented ad-hoc JSON formats, patched behaviors into system prompts, and then wondered why everything turned into dependency hell. MCP—Model Context Protocol—exists because this nonsense got out of hand. And to be real for a second, if you’re still writing custom adapters for every database, you are wasting your time. MCP isn't a nice-to-have. It’s the only path forward for scalable agentic AI.

Let’s walk through what that actually means—not the polished marketing summary, but a brutally honest, architecture-level take for people who ship real systems.

Why custom glue code is killing your AI agents

If you’ve spent even one quarter maintaining an agent stack, you already know the archeology project that comes with it. You find little pockets of code that were written at 3 a.m. to “temporarily” adapt a model to talk to some obscure internal service. You find JSON-RPC-ish payloads that never quite matched the schema. You find system prompts doing far more heavy lifting than they should, instructing the LLM not just what to do but how to speak to your tool wrapper without breaking.

This is the era I call the “spaghetti adapter” period of agent development. A time when every team built their own glue layer, their own wrapper style, their own serialization tricks, and their own failure modes. The result is a pile of technical debt hidden behind a facade of “it works on my machine,” held together by tribal knowledge and fragile DevOps pipelines.

Here’s the quiet truth: most of that glue code isn’t adding any real value. It’s just translating between slightly different JSON structures that were never standardized because we were moving too fast.

The real cost isn’t the code. It’s the cascading unpredictability. A single tool rename can break your agent. A new optional field in your DB schema can confuse the LLM and suddenly it stops querying properly. A server returning null instead of {} can throw the whole chain off. And let’s not even get into latency spikes where your Python tool call hangs because the wrapper doesn’t implement proper timeout logic.

MCP exists because we collectively hit the wall.

Anthropic didn't invent MCP to be cute. They invented it because the agent ecosystem was devolving into a thousand incompatible home-grown APIs. The Model Context Protocol is a standardized interface—finally—for how LLMs should interact with tools, resources, and external systems. No more bespoke wrappers. No more unpredictable JSON schemas. No more guessing.

If you’ve been in the trenches, MCP feels less like a new framework and more like the moment we get to stop reinventing the wheel every two weeks.

A short digression: the evolution of AI tools (and why this time is different)

Before jumping deeper, let me indulge in one short digression. Because if you zoom out a bit, you’ll notice that AI tooling as a whole has gone through the same arc that backend frameworks went through twenty years ago.

We started with raw HTTP handlers. Eventually frameworks emerged: Express, Django, Rails. Then came ORMs. Then came specialized interfaces. Each layer standardized what previously required bespoke glue.

LLMs are now hitting that same inflection point. The early generations of tools were basically mashed-up scripts pretending to be structured interfaces. Then function-calling arrived, which helped but created another problem: every vendor implemented it differently. Different schema validation. Different error handling. Different expectations around arguments. Different ideas of what "stateless" meant. We accidentally built a zoo of micro-protocols.

MCP is the industry’s attempt to end the chaos and settle on one unified standard. And unlike previous attempts at standardization, MCP has traction because it solves direct pain. It’s not theoretical. It fixes actual daily misery.

Alright—digression over. Back to real work.

Building scalable agents with Model Context Protocol

Let’s break down what MCP actually gives you—not the abstract spec, but the pieces that matter when you're building agents that clients rely on.

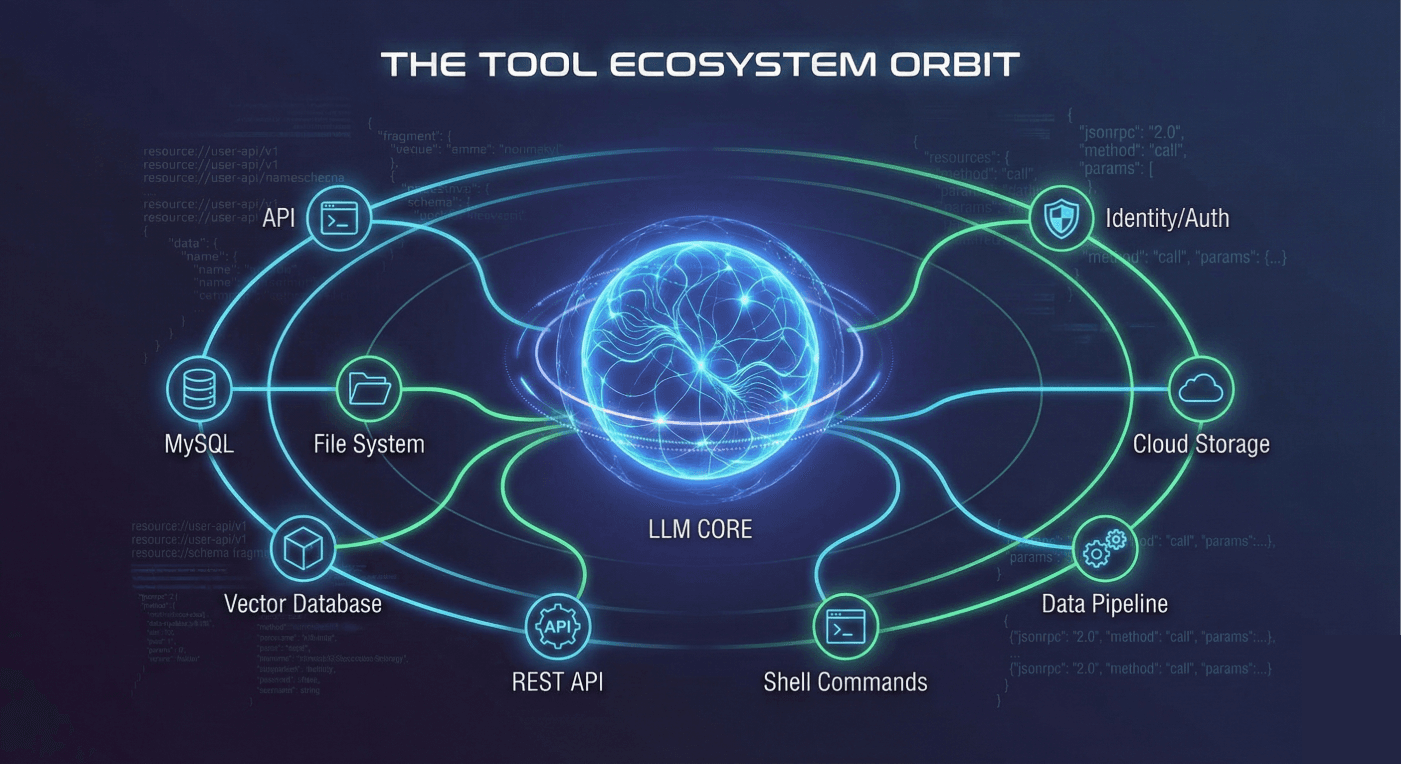

At the core, MCP is a communication protocol built on JSON-RPC 2.0 that defines a clean client-server architecture. The application hosting the LLM acts as the client. Your tools (databases, APIs, shell commands, vector stores, internal services) sit behind MCP servers. This flips the mental model. Instead of the LLM needing custom wrappers, your tools expose a standardized interface. The model communicates through defined messages, and every tool declares a JSON Schema for its inputs as part of the protocol itself. No hacks. No guesswork. No “let’s hope the model formats this right.”
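To make “JSON-RPC based” concrete, here is roughly what one tool invocation looks like on the wire. It is a sketch built from the JSON-RPC 2.0 envelope and the `tools/call` method in the MCP specification; the `query_customers` tool, its arguments, and the `matches` helper are hypothetical, and in practice an SDK builds these messages for you.

```python
import json

# Hypothetical tool call, framed as a JSON-RPC 2.0 request using MCP's tools/call method.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "query_customers",  # hypothetical tool
        "arguments": {"country": "PK", "limit": 5},
    },
}

# A successful reply: tool output comes back as a list of typed content items.
response = {
    "jsonrpc": "2.0",
    "id": 1,  # echoes the request id so the client can pair them up
    "result": {
        "content": [{"type": "text", "text": "5 customers found"}],
        "isError": False,
    },
}

def matches(req: dict, resp: dict) -> bool:
    """True if resp looks like a well-formed JSON-RPC 2.0 reply to req."""
    return (
        resp.get("jsonrpc") == "2.0"
        and resp.get("id") == req.get("id")
        and ("result" in resp) != ("error" in resp)  # exactly one of the two
    )

wire = json.dumps(request)  # the string that actually crosses the transport
print(wire)
print(matches(request, response))  # True
```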

Every MCP server defines:

- Tools

- Resources

- Prompts

- Schemas (a JSON Schema describing each tool's inputs)

And these are not random. They follow strict rules. Your agent becomes predictable because the LLM doesn’t improvise the interface—it consumes it.
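Concretely, “strict rules” mostly means each tool advertises a name, a description, and a JSON Schema for its arguments, which clients fetch with `tools/list`. Below is a minimal sketch of a server answering that request; the field names follow the MCP specification as I understand it, while `query_customers` and `handle_tools_list` are made-up examples.

```python
# A tool definition as a server might advertise it in a tools/list reply.
# The tool itself is hypothetical; name, description and inputSchema are
# the parts the model actually reads.
QUERY_CUSTOMERS = {
    "name": "query_customers",
    "description": "Look up customers by ISO country code.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "country": {"type": "string", "description": "ISO 3166-1 alpha-2 code"},
            "limit": {"type": "integer", "minimum": 1, "maximum": 100},
        },
        "required": ["country"],
    },
}

TOOLS = [QUERY_CUSTOMERS]

def handle_tools_list(request_id: int) -> dict:
    """Build the JSON-RPC reply to a tools/list request (hypothetical helper)."""
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": TOOLS}}

reply = handle_tools_list(2)
print([t["name"] for t in reply["result"]["tools"]])  # ['query_customers']
```

The description and schema are exactly what the model sees, so they deserve the same care as any public API.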

This one shift creates scalability. You want fifty tools? Plug them in as MCP servers. You want to swap your local PostgreSQL access with a remote warehouse? Replace the server. You want your agent to run in a stateless environment but still access local data? MCP supports resource URIs with clear access patterns. The architecture is no longer a patchwork. It’s modular.

The biggest practical win I’ve seen is schema stability. With MCP, the schema is not optional. The LLM can only call tools that have clearly defined JSON schemas. If those schemas drift, tools fail predictably, with errors the LLM can reason about. You stop having phantom failures or partial executions. You get far more predictable behavior in a system that traditionally hated determinism.
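Here is what “fail predictably” can look like on the server side. This is a deliberately tiny sketch, not a full JSON Schema validator: it checks required keys, unexpected keys (only when `additionalProperties` is false), and a few basic types, then answers with the standard JSON-RPC `-32602` (invalid params) code. The helper names are hypothetical; a real server would lean on its SDK or a library such as `jsonschema`. Spec revisions have also differed on whether bad arguments come back as a protocol error or as a tool result flagged `isError`, so check the version you target.

```python
INVALID_PARAMS = -32602  # standard JSON-RPC 2.0 "Invalid params" code

# Only the JSON Schema types this sketch needs. bool is excluded from
# "integer" because bool is a subclass of int in Python.
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
}

def validate_arguments(schema: dict, args: dict) -> list:
    """Return a list of problems; an empty list means the arguments pass.
    Checks required keys, unexpected keys and basic types, nothing more."""
    problems = []
    props = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in args:
            problems.append(f"missing required argument '{key}'")
    for key, value in args.items():
        if key not in props:
            # JSON Schema allows extra keys unless additionalProperties is false.
            if schema.get("additionalProperties", True) is False:
                problems.append(f"unknown argument '{key}'")
            continue
        check = TYPE_CHECKS.get(props[key].get("type"))
        if check is not None and not check(value):
            problems.append(f"'{key}' should be of type {props[key]['type']}")
    return problems

def check_call(request_id: int, schema: dict, args: dict):
    """Return a JSON-RPC error reply for bad arguments, or None if they pass."""
    problems = validate_arguments(schema, args)
    if not problems:
        return None
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": INVALID_PARAMS, "message": "; ".join(problems)}}

schema = {
    "type": "object",
    "properties": {"country": {"type": "string"}, "limit": {"type": "integer"}},
    "required": ["country"],
    "additionalProperties": False,
}

print(check_call(3, schema, {"region": "PK"})["error"]["message"])
# missing required argument 'country'; unknown argument 'region'
```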

And to be honest, after years of improvising agent stacks, this feels like fresh air.

The real difference: LLMs stop hallucinating tool calls

One of the most underestimated benefits of MCP is that it cuts out a whole class of hallucinations related to tool calling. When the model doesn’t know the tool interface, it makes assumptions. Sometimes those assumptions are correct. Sometimes they are “surely the DB table is called users even though it’s actually user_accounts_v3.”

Under MCP, the model doesn’t guess. It reads the tool definitions. It understands the arguments. Its calls are checked against the declared schema before the tool runs. This shifts tool calling from a generative act to an interpretive one. And that’s what makes agentic AI finally behave like a system, not a clever parrot.

The model becomes a controller orchestrating remote procedure calls. Not a creative writer trying to produce JSON that vaguely resembles your expectations.

Connecting LLMs to local data sources securely

Now we arrive at what I believe is the killer feature for enterprise adoption: local environment access without chaos.

Security teams hate when developers invent random ways for LLMs to access files or databases. They hate ad-hoc wrappers. They hate shell access via prompts. They hate when you tell them “don’t worry, the model won’t do something dangerous if we instruct it nicely.” Because we all know instructions aren't enough.

MCP solves this by isolating all real-world interactions behind clearly defined, permissioned servers. Your LLM never touches the file system directly. It never shells into the machine. It never improvises a command. You expose specific resources—like /data/customers.csv—with explicit allowed operations, and the model interacts only through that interface.

You get predictable local environment access. You get a single choke point where every call can be audit-logged. You get schema validation. You get a connection where every tool call passes through a server you control.
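A sketch of that idea for the `/data/customers.csv` example: the server answers `resources/read` only for URIs on an explicit allowlist and writes an audit line for every attempt, allowed or not. The allowlist, the placeholder CSV content, and the handler name are hypothetical. The audit log is something your server does, not something the protocol does for you; MCP just gives you one obvious place to put it. The `-32002` “resource not found” code is what I recall the specification recommending, so treat it as an assumption.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit = logging.getLogger("mcp.audit")

# Hypothetical allowlist: the only URIs this server will ever serve.
# The lambda is a placeholder for actually reading the file.
ALLOWED_RESOURCES = {
    "file:///data/customers.csv": ("text/csv", lambda: "id,name\n1,Ayesha\n"),
}

RESOURCE_NOT_FOUND = -32002  # assumption: code suggested by the MCP spec

def handle_resources_read(request_id: int, uri: str) -> dict:
    """Serve resources/read for allowlisted URIs only; audit every attempt."""
    stamp = datetime.now(timezone.utc).isoformat()
    entry = ALLOWED_RESOURCES.get(uri)
    if entry is None:
        audit.info("%s DENIED resources/read %s", stamp, uri)
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": RESOURCE_NOT_FOUND,
                          "message": f"Resource not found: {uri}"}}
    mime_type, load = entry
    audit.info("%s ALLOWED resources/read %s", stamp, uri)
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"contents": [{"uri": uri, "mimeType": mime_type,
                                     "text": load()}]}}

print(handle_resources_read(7, "file:///etc/passwd")["error"]["code"])  # -32002
```

Anything not on the list simply does not exist from the model's point of view, which is a far easier story to tell a security team than “we asked it nicely.”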

There’s nothing magical here. It’s simply the first time the AI ecosystem has treated tool access like a proper RPC layer instead of a clever prompt engineering trick.

In our internal deployments at Agents Arcade, this has been transformative. Instead of exposing entire systems, we create tightly-scoped MCP servers for each domain: MySQL, vector search, filesystem, CRM API, internal compliance engine, and so on. The LLM orchestrates between them, but it never escapes the sandbox.

This is how you build real enterprise agents—not the toy demos you see floating around.

Why proprietary ecosystems are the dead end

Some vendors are trying to keep everything inside their walled garden. You write tools their way. You deploy on their platform. You accept their constraints. And it works fine for prototypes, but the moment you need to integrate with a government API running on a 12-year-old server in a locked-down datacenter in Lahore, their “ecosystem” collapses.

Proprietary tool layers have no incentive to support your tech stack. They won’t integrate with your legacy systems. They won’t adapt to your security models. They want you to adapt to them.

MCP is the opposite. It’s a thin standard. A protocol. It doesn’t care if the server is written in Go, Python, Rust, Node, or running on a Raspberry Pi. As long as it speaks JSON-RPC over one of the standard transports (stdio for local processes, HTTP for remote ones), it works. You can implement it in ten lines or three hundred, depending on your needs.
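To show how thin “speaks JSON-RPC over stdio” really is: with the stdio transport, client and server exchange one JSON-RPC message per line on stdin and stdout. The toy loop below handles just that framing plus MCP's `ping` method. It is not a compliant server (it skips the `initialize` handshake, capability negotiation, and everything else), and the handler table is hypothetical; use an official SDK for real work.

```python
import io
import json
import sys

def ping(params: dict) -> dict:
    return {}  # ping replies with an empty result

HANDLERS = {"ping": ping}  # hypothetical, minimal method table

def serve(stdin=sys.stdin, stdout=sys.stdout) -> None:
    """Read one JSON-RPC message per line; write one reply per line."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        try:
            msg = json.loads(line)
        except json.JSONDecodeError:
            reply = {"jsonrpc": "2.0", "id": None,
                     "error": {"code": -32700, "message": "Parse error"}}
        else:
            if "id" not in msg:
                continue  # notifications never get a reply
            handler = HANDLERS.get(msg.get("method"))
            if handler is None:
                reply = {"jsonrpc": "2.0", "id": msg["id"],
                         "error": {"code": -32601, "message": "Method not found"}}
            else:
                reply = {"jsonrpc": "2.0", "id": msg["id"],
                         "result": handler(msg.get("params", {}))}
        # json.dumps never emits raw newlines, so one message stays on one line.
        stdout.write(json.dumps(reply) + "\n")
        stdout.flush()

# Usage with in-memory streams instead of a real subprocess pipe.
fake_in = io.StringIO('{"jsonrpc":"2.0","id":1,"method":"ping"}\n'
                      '{"jsonrpc":"2.0","method":"notifications/initialized"}\n')
fake_out = io.StringIO()
serve(fake_in, fake_out)
print(fake_out.getvalue(), end="")
```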

And because it’s vendor-agnostic, you can use it with Anthropic Claude today, with OpenAI tomorrow (they already support the core idea), and with any future model that embraces agentic AI standards.

This is why I keep telling teams: stop tying your systems to proprietary wrappers. MCP is the interoperability layer you actually need.

Real talk: implementing MCP is easier than you think

A lot of developers assume adopting MCP requires some architectural overhaul. Not really. Most of your existing tools already have callable APIs. MCP just forces you to formalize them.

Take any tool you’ve wrapped manually before:

- A database query function

- A file reader

- A local vector store

- A shell command

- An API request handler

Wrap it in an MCP server. Define its schema. Expose resources if needed. The entire architecture becomes cleaner. You get one calling convention for every tool. You get auto-discovery of available tools. You get consistent error messages instead of whatever random exceptions Python throws that day.
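One way to “define its schema” without hand-writing JSON is to derive it from the function signature you already have. The `tool` decorator and `REGISTRY` below are hypothetical, a sketch of the pattern rather than any SDK's API (the official Python SDK's `FastMCP` helper takes a similar decorator-based approach). Only `str`, `int`, `float`, and `bool` annotations are mapped here.

```python
import inspect
from typing import Callable, get_type_hints

# Hypothetical registry of wrapped tools: definition for tools/list, fn for tools/call.
REGISTRY: dict = {}
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def tool(fn: Callable) -> Callable:
    """Register fn as a tool, deriving a JSON Schema from its signature.
    Unsupported annotations raise KeyError in this sketch."""
    hints = get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": JSON_TYPES[hints[name]]}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the argument is required
    REGISTRY[fn.__name__] = {
        "definition": {
            "name": fn.__name__,
            "description": inspect.getdoc(fn) or "",
            "inputSchema": {"type": "object", "properties": properties,
                            "required": required},
        },
        "fn": fn,
    }
    return fn

@tool
def read_lines(path: str, max_lines: int = 20) -> str:
    """Return the first lines of a text file (hypothetical legacy helper)."""
    with open(path, encoding="utf-8") as f:
        return "".join(line for _, line in zip(range(max_lines), f))

print(REGISTRY["read_lines"]["definition"]["inputSchema"]["required"])  # ['path']
```

Parameters with defaults become optional, so the legacy helper and its advertised schema cannot drift apart.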

The biggest surprise for me was how natural the server-client architecture feels. Instead of passing tools into the LLM through a custom agent wrapper, you connect the LLM to MCP servers. It’s like plugging peripherals into a USB port. The LLM just sees what’s available and uses it.
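The “plugging into a USB port” moment is a short handshake. After connecting, a client sends `initialize`, then the `notifications/initialized` notification, and can then ask for `tools/list`. The sketch below only builds those messages. The client name and the protocol revision string are assumptions (use whatever revision your SDK targets), and a real client waits for the server's `initialize` reply, including its declared capabilities, before sending anything else.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # request ids only need to be unique per session

def request(method: str, params: Optional[dict] = None) -> dict:
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg

def notification(method: str) -> dict:
    return {"jsonrpc": "2.0", "method": method}  # no id, so no reply expected

# Opening sequence a client sends to a freshly connected server.
handshake = [
    request("initialize", {
        "protocolVersion": "2025-06-18",  # assumption: the revision you target
        "capabilities": {},
        "clientInfo": {"name": "example-agent", "version": "0.1.0"},  # hypothetical
    }),
    notification("notifications/initialized"),
    request("tools/list"),  # discover what the server offers
]

for msg in handshake:
    print(json.dumps(msg))
```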

This is how agents should have worked from day one.

For concrete examples of how AI agents are already automating complex tasks in real workflows, see our article on real-world examples of AI agents automating tasks.

The messy middle: debugging before MCP vs after MCP

Let me paint you a picture of the debugging process before MCP.

Your agent tries to call a tool. The JSON is malformed. You get an exception in Python. The wrapper swallows it. The LLM tries again. This time the schema is wrong. You get an empty response. The agent hallucinates a fallback answer. You dig through logs. You compare versions. You guess.

Now with MCP?

The model attempts the tool call. The server rejects it with schema validation. The model corrects itself. If it fails again, you get a precise error code. You fix the schema or the server. Done.
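A sketch of that loop, with a stub standing in for the model. The server, the `get_invoice` tool, and `fake_model` are all hypothetical. A bad argument comes back as a tool result flagged `isError`, which is text the model can read and act on, while an unknown tool is a JSON-RPC protocol error that the host surfaces instead of retrying. Spec revisions have drawn that line slightly differently, so treat the split as an assumption.

```python
from typing import Optional

MAX_ATTEMPTS = 2

def call_tool(name: str, args: dict) -> dict:
    """Stand-in server (jsonrpc/id envelope omitted). The hypothetical
    get_invoice tool needs an integer invoice_id."""
    if name != "get_invoice":
        # Unknown tool: a protocol-level JSON-RPC error.
        return {"error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    if not isinstance(args.get("invoice_id"), int):
        # Bad argument: reported as a tool result the model can read.
        return {"result": {"isError": True, "content": [
            {"type": "text", "text": "invoice_id must be an integer"}]}}
    return {"result": {"isError": False, "content": [
        {"type": "text", "text": f"Invoice {args['invoice_id']}: paid"}]}}

def fake_model(feedback: Optional[str]) -> dict:
    """Stub for the LLM: guesses wrong first, then fixes the argument."""
    if feedback and "integer" in feedback:
        return {"invoice_id": 42}
    return {"invoice_id": "INV-42"}

def run(model, tool_name: str) -> str:
    feedback = None
    for _ in range(MAX_ATTEMPTS):
        reply = call_tool(tool_name, model(feedback))
        if "error" in reply:
            # Protocol errors go to the host/developer, not into a retry.
            raise RuntimeError(reply["error"]["message"])
        result = reply["result"]
        text = result["content"][0]["text"]
        if not result["isError"]:
            return text
        feedback = text  # feed the tool's error text back to the model
    raise RuntimeError(f"gave up after {MAX_ATTEMPTS} attempts: {feedback}")

print(run(fake_model, "get_invoice"))  # Invoice 42: paid
```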

LLMs are surprisingly good at self-correcting when the protocol enforces structure. It’s almost like working with a strict type system after years of untyped chaos. You don’t realize how much time you’ve been wasting until everything just works.

The subtle shift: the system prompt becomes a real system config

One thing I love about MCP is how it changes the role of system prompts. With custom glue code, the system prompt becomes a dumping ground. You throw in instructions like “When calling the database, use snake_case and wrap the query in a JSON object.” It’s ridiculous. The model shouldn't need to read prose to learn your API.

MCP removes that. The system prompt goes back to what it should be: high-level behavior, not implementation details. Your model no longer needs to memorize tool interfaces. It discovers them through the protocol.

This eliminates an entire category of prompt-induced bugs. It also makes your agent portable. Swap tools, swap servers, but keep your system prompt exactly the same. This gives you architecture-level modularity that used to require a mountain of abstraction layers.

How MCP fits into the agentic AI standards era

There’s a bigger picture here. Agentic AI is transitioning from clever engineering hacks to real systems. Just like microservices needed Docker and Kubernetes to mature, multi-tool AI agents need MCP or something equivalent. The industry is clearly moving toward common standards:

- Socket-like interfaces for local tools

- Declarative schema-driven validation

- Stateless RPC-based architecture

- Model-discoverable resources

- Vendor-agnostic interoperability

If you're serious about agentic workflows—real workflows, not demos—you need a way for LLMs to call tools that won't break every week. MCP is the first protocol that acknowledges the complexity of real integrations.

The days of “just put it in the system prompt” are over.

If you’re looking to implement MCP in production, our guide on building LLM apps with FastAPI — best practices walks through practical patterns for structuring endpoints, tool orchestration, and scaling workflows.

Let’s talk about performance (because someone will ask)

Yes, MCP adds overhead. JSON-RPC is not as fast as direct local calls, and the protocol framing means each interaction carries slightly more metadata. But here's the thing: the reliability it brings far outweighs the micro-latency cost.

When you're dealing with LLM latencies measured in hundreds of milliseconds—or more—the overhead of a structured RPC layer is insignificant. And more importantly, the protocol allows smarter batching, tool call planning, and structured multi-step workflows.

Performance matters. But predictability matters more. MCP gives you both.

Where MCP will be in 12 months

This is the part where I make a prediction based on three decades of engineering work: within a year, every serious AI platform will either support MCP or fail to attract developers building real agents.

Early adopters already see the benefits. Enterprise teams love standards. Security teams love enforceable schemas. Backend engineers love predictability. Architects love modularity. This won’t be optional. The momentum is too strong.

We will eventually see:

- Shared repositories of MCP tools

- Standardized connectors for databases, CRMs, and cloud services

- MCP-native agent frameworks

- LLMs trained specifically for MCP-style tool planning

Agents will become ecosystems, not monoliths. And MCP is the protocol gluing the ecosystem together.

Final words from someone who's been through the trenches

I’ve built enough AI systems to know when something is going to quietly fail at scale. Custom glue code, bespoke wrappers, handwritten JSON schemas—they will bury your agent project. They already have for many teams.

MCP is the first realistic escape route. It’s not hype. It’s not a framework-of-the-month. It’s a proper protocol solving a real problem.

And if you take one thing away from this entire article, let it be this:

Stop building brittle tool layers. Start building MCP servers. Your future self will thank you.

If you’re done wrestling with fragile integrations and want agents that actually work, let’s talk. Visit Agents Arcade for a consultation.

Majid Sheikh is the CTO and Agentic AI Developer at Agents Arcade, specializing in agentic AI, RAG, FastAPI, and cloud-native DevOps systems.