Building LLM apps with FastAPI — best practices

I’ll start with a prediction, because it’s already half true. Most “LLM-powered APIs” shipping today will be rewritten within twelve months, not because the models changed, but because the backend architecture couldn’t survive contact with real users. Latency spikes, token bills explode, prompts drift, and suddenly the shiny demo endpoint becomes the most fragile part of the system.

If you want a broader foundation on how modern AI agents plan, reason, and orchestrate workflows (beyond just backend architecture), check out our practical guide to AI agents . It covers agent workflows, planning strategies, tool calling patterns, and production-ready design.

I’ve been building APIs long enough to remember when FastAPI itself was considered “cute but risky.” Today it’s the default choice for serious AI backends, and for good reason. But here’s the uncomfortable truth: FastAPI doesn’t save you from bad LLM design. It just makes it faster to build the wrong thing.

If you’re wiring OpenAI API calls into a /chat endpoint and calling it a day, you’re not building an LLM application. You’re building a latency amplifier with a memory leak disguised as intelligence.

Here’s the thing. LLM apps are not special because they use large language models. They’re special because they mix probabilistic systems with deterministic infrastructure, and FastAPI sits right at that fault line. If you don’t respect that, you’ll feel it in production.

The real problem with most FastAPI LLM backends

Most teams start with the same mental model: request comes in, prompt goes out, response comes back. It looks like any other API call, so they treat it like one. Synchronous endpoint, JSON in, JSON out. Maybe a bit of async sprinkled on top to feel modern.

That model collapses the moment you add real constraints. Token limits, streaming responses, retries, tool calling, background tasks, vector searches, user-specific context, and rate limiting all collide in the same request lifecycle. FastAPI will happily let you do all of this in one endpoint, but that doesn’t mean you should.

To be blunt, naive chat endpoints fail because they conflate orchestration with transport. FastAPI is excellent at HTTP. It is not your agent runtime, your prompt registry, or your state machine. When people complain that “LLM apps don’t scale,” what they usually mean is that they built orchestration logic directly into request handlers and now can’t reason about it.

This is where experience starts to matter.

Best practices for LLM apps with FastAPI

The first hard-earned rule is separation, and not the textbook kind. Your FastAPI layer should be boring. Painfully boring. It should validate input, authenticate users, enforce rate limiting, and hand off work to something else. The moment your endpoint starts deciding which prompt to use or which tool to call, you’re already on thin ice.

I’ve seen teams spend weeks tuning async Python performance when the real issue was that every request was rebuilding prompts from scratch, fetching embeddings synchronously, and calling the OpenAI API with zero caching. FastAPI wasn’t slow. The design was.

You want thin endpoints and thick services. The “service” here is not a class with a couple of methods. It’s a clearly defined execution unit that can be tested outside of HTTP entirely. When you do this properly, FastAPI becomes replaceable. That’s a good sign.

Another non-negotiable practice is explicit prompt versioning. Not comments in code. Not Git commit messages. Real, first-class prompt versions that your FastAPI app can select at runtime. LLM behavior is part of your API contract, whether you like it or not. If you can’t roll back a prompt without redeploying, you’re running a science experiment, not a production system.

And yes, this all feels heavy at the beginning. It’s supposed to. Production AI is heavier than demos. That’s the price of reliability.

FastAPI architecture for AI applications

Let’s talk architecture, because this is where most strong backend engineers initially underestimate LLM complexity.

A solid FastAPI architecture for AI applications treats the model as an unreliable collaborator, not a function. That mindset shift changes everything. You stop assuming deterministic outputs. You stop assuming single-step execution. You start designing for retries, partial failures, and timeouts as first-class concerns.
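One way to make "retries, partial failures, and timeouts" concrete is a small wrapper around every model call. This is a sketch using only the standard library; `call_with_retries`, `ModelCallFailed`, and the default values are illustrative choices, and a real client would likely need to retry on its own error types as well.

```python
import asyncio
import random


class ModelCallFailed(Exception):
    """Raised when every attempt timed out or errored."""


async def call_with_retries(call, *, attempts=3, timeout_s=10.0, base_delay_s=0.5):
    """Run an async callable with a per-attempt timeout and exponential backoff."""
    last_error = None
    for attempt in range(attempts):
        try:
            return await asyncio.wait_for(call(), timeout=timeout_s)
        except (asyncio.TimeoutError, ConnectionError) as exc:
            last_error = exc
            if attempt < attempts - 1:
                # Backoff with jitter so retries from many requests do not align.
                jitter = 0.5 + random.random() / 2
                await asyncio.sleep(base_delay_s * (2 ** attempt) * jitter)
    raise ModelCallFailed(f"gave up after {attempts} attempts") from last_error
```

The caller passes a zero-argument coroutine function, for example `await call_with_retries(lambda: client_call(prompt))`, where `client_call` stands in for whatever model client you use.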

In practice, this means your FastAPI app should not “do the work.” It should coordinate work. Your request handler triggers an execution flow that may involve retrieval, tool calling, multiple model invocations, and post-processing. Some of that belongs in background tasks. Some of it belongs in workers. Almost none of it belongs inline.

Async Python helps, but it’s not magic. Async lets you overlap I/O, not make slow operations fast. If you’re waiting on an OpenAI API call, async just means you can wait politely. If your latency budget is blown, you need architectural changes, not syntax changes.
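A small experiment shows the distinction. In this sketch `fake_model_call` is a stand-in for a network call to a model provider, simulated with `asyncio.sleep`; the 0.2 second delay is arbitrary.

```python
import asyncio
import time


async def fake_model_call(delay_s: float) -> str:
    # Stand-in for a network call; the event loop is free while we wait.
    await asyncio.sleep(delay_s)
    return "done"


async def main() -> tuple:
    start = time.perf_counter()
    await fake_model_call(0.2)  # a single request still takes the full delay
    single = time.perf_counter() - start

    start = time.perf_counter()
    # Ten calls overlap, so the total is close to one delay, not ten.
    await asyncio.gather(*(fake_model_call(0.2) for _ in range(10)))
    concurrent = time.perf_counter() - start
    return single, concurrent
```

Running `asyncio.run(main())` should show that concurrency improves throughput across requests, while the latency of any individual call is unchanged.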

One pattern I keep coming back to is treating LLM executions as jobs with traceable lifecycles. Even if the user gets a synchronous response, internally you track execution IDs, intermediate steps, and model decisions. When something goes wrong, and it will, this is the difference between “the model hallucinated” and “the retrieval step returned an empty context and the fallback prompt triggered.”
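A minimal sketch of such an execution record follows. The `Execution` and `Step` classes and their fields are assumptions for illustration; in a real system the steps would be shipped to a tracing backend rather than kept in a list.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    status: str
    detail: str
    duration_ms: float


@dataclass
class Execution:
    execution_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    steps: list = field(default_factory=list)

    def record(self, name, status, detail="", started=None):
        # started is an optional time.perf_counter() value taken before the step.
        elapsed = (time.perf_counter() - started) * 1000 if started is not None else 0.0
        self.steps.append(Step(name, status, detail, elapsed))

    def explain(self) -> str:
        return " -> ".join(f"{s.name}:{s.status}" for s in self.steps)


run = Execution()
run.record("retrieval", "empty", detail="0 documents matched")
run.record("prompt", "fallback", detail="used no-context prompt")
run.record("model", "ok")
print(run.explain())  # retrieval:empty -> prompt:fallback -> model:ok
```

With the execution ID attached to the HTTP response or log line, a bad answer can be traced back to the step that actually caused it.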

FastAPI integrates nicely with this approach because dependency injection gives you clean seams. Use them. Don’t pass clients and configs around like global state. Inject them deliberately, and log aggressively.

Why async Python alone won’t save you

There’s an almost religious belief in some circles that async Python is the solution to scaling LLM APIs. I get where it comes from. FastAPI made async approachable, and LLM calls are I/O-heavy. On paper, it makes sense.

In reality, async only solves one layer of the problem. If your prompts are bloated, your token limits are ignored, and your RAG pipelines are doing full-table scans on every request, async just lets you fail faster.

Latency optimization in LLM systems starts before the API call. It starts with prompt discipline. Shorter system prompts. Structured outputs. Aggressive context pruning. Caching embeddings and intermediate results. These are architectural decisions, not concurrency tricks.
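Caching embeddings is the easiest of these to sketch. The example below assumes a hypothetical `embed_fn` callable that maps a string to a vector, and it keeps results in a process-local dict; a shared cache would be needed once you run more than one worker.

```python
import hashlib


class EmbeddingCache:
    def __init__(self, embed_fn):
        self._embed_fn = embed_fn  # hypothetical callable: str -> list of floats
        self._store = {}
        self.misses = 0

    def get(self, text: str):
        # Normalize whitespace so trivially different inputs share one entry.
        normalized = " ".join(text.split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._embed_fn(normalized)
        return self._store[key]
```

The `misses` counter is there so you can measure the hit rate before deciding whether the cache is worth keeping.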

FastAPI gives you background tasks, and they’re criminally underused. Not every user-facing request needs the full answer immediately. Follow-up summarization, logging, analytics, even some tool calls can happen after the response is returned. This one decision can cut perceived latency in half without touching async at all.

To be honest, the teams that win are the ones who obsess over where time is actually spent, not the ones chasing theoretical throughput numbers.

Scaling LLM APIs in production

Scaling LLM APIs in production is where theory meets invoices. You can’t talk about scale without talking about cost, and you can’t talk about cost without talking about control.

The first scaling wall is rate limiting, and not just at the HTTP layer. You need user-level limits, org-level limits, and model-level limits. FastAPI can enforce request limits easily, but token-based limits require deeper integration. If you don’t track token usage per request, you’re flying blind.
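Token-based limits can be sketched as a sliding-window budget keyed by user, org, or model. The `TokenBudget` class and its parameters are illustrative, and the state lives in process memory here; across several app instances it would need a shared store.

```python
import time
from collections import defaultdict, deque


class TokenBudget:
    """Sliding-window token limit per key (user ID, org ID, or model name)."""

    def __init__(self, max_tokens: int, window_s: float = 60.0, clock=time.monotonic):
        self.max_tokens = max_tokens
        self.window_s = window_s
        self._clock = clock
        self._events = defaultdict(deque)  # key -> deque of (timestamp, tokens)

    def used(self, key: str) -> int:
        events = self._events[key]
        cutoff = self._clock() - self.window_s
        while events and events[0][0] <= cutoff:
            events.popleft()  # drop usage that has aged out of the window
        return sum(tokens for _, tokens in events)

    def try_consume(self, key: str, tokens: int) -> bool:
        if self.used(key) + tokens > self.max_tokens:
            return False
        self._events[key].append((self._clock(), tokens))
        return True
```

A handler would call `try_consume` with an estimated token count before the model call and reject the request with HTTP 429 when it returns `False`; recording actual usage after the call keeps the numbers honest.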

Then comes horizontal scaling. Stateless FastAPI apps are easy to scale. Stateful LLM workflows are not. If your application relies on in-memory conversation state, you’ve already limited your scaling options. Externalize state early. Redis is boring and reliable for a reason.

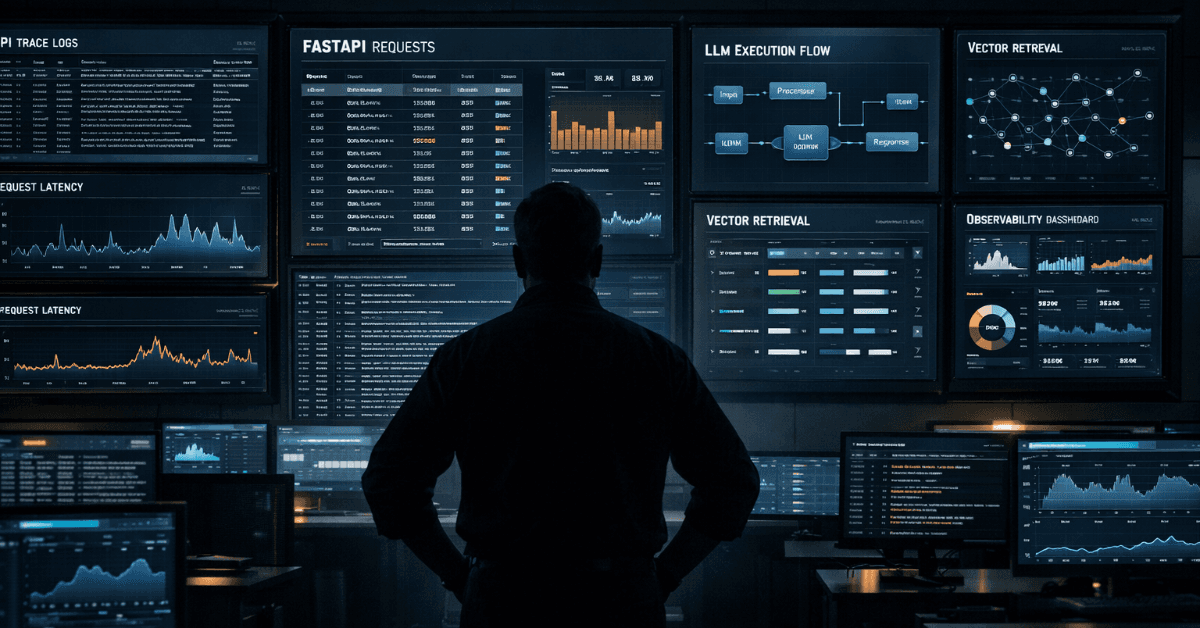

The next wall is observability. Traditional metrics won’t tell you why an LLM response was bad. You need traces that include prompts, model parameters, tool calls, and responses. Yes, that’s sensitive data. Handle it responsibly. But without it, you’re debugging vibes.

I’ve seen CTOs try to scale by switching models, hoping a cheaper OpenAI API tier will fix everything. It never does. The real savings come from reducing unnecessary calls, batching where possible, and rethinking workflows so the model is used only where it adds value.

Here’s a digression that always circles back. People talk about “AI-first architecture.” I think that’s backwards. You want architecture-first AI. Strong boundaries, explicit contracts, and ruthless simplicity. Once that’s in place, models become interchangeable. Without it, every upgrade is a gamble.

Tool calling is an architectural decision, not a feature

Tool calling is powerful, but it’s also a trap. The moment your LLM can call tools, it becomes part of your control plane. That means failures aren’t just possible, they’re expected.

In FastAPI-based systems, tools should look like internal APIs, not helper functions. They should be authenticated, validated, and observable. When the model calls a tool, you should know exactly what happened, how long it took, and whether it succeeded.

One mistake I see often is letting the model decide too much. Tool selection, input shaping, and error handling all delegated to the LLM. That works until it doesn’t, and when it fails, it fails creatively.

A more resilient approach is constrained tool calling. The model proposes actions. Your system validates and executes them. FastAPI’s dependency system makes this cleaner than people realize, especially when combined with schema validation.
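The "model proposes, system validates and executes" idea can be sketched without any framework. In this example the JSON proposal shape, the `REGISTRY` dict, and the `get_order_status` tool are all assumptions; a production version would more likely validate arguments with Pydantic models.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    handler: Callable
    required_args: dict  # argument name -> expected type


def get_order_status(order_id: str) -> str:
    return f"order {order_id}: shipped"  # hypothetical tool


REGISTRY = {"get_order_status": Tool(get_order_status, {"order_id": str})}


def execute_proposal(raw: str) -> dict:
    """Validate a model-proposed tool call (JSON text) before running anything."""
    try:
        proposal = json.loads(raw)
    except json.JSONDecodeError:
        return {"ok": False, "error": "proposal is not valid JSON"}
    if not isinstance(proposal, dict):
        return {"ok": False, "error": "proposal must be an object"}
    name = proposal.get("tool")
    if not isinstance(name, str) or name not in REGISTRY:
        return {"ok": False, "error": "unknown tool"}
    tool = REGISTRY[name]
    args = proposal.get("args", {})
    if not isinstance(args, dict) or set(args) != set(tool.required_args):
        return {"ok": False, "error": "unexpected or missing arguments"}
    for arg, expected in tool.required_args.items():
        if not isinstance(args[arg], expected):
            return {"ok": False, "error": f"{arg} must be {expected.__name__}"}
    return {"ok": True, "result": tool.handler(**args)}
```

The model never gets to invent a tool name or slip in extra arguments: anything outside the registry is rejected with a structured error that can be logged or fed back to the model.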

RAG pipelines and the myth of “just add a vector DB”

Retrieval-augmented generation is sold as a bolt-on feature. Add a vector database, embed your docs, and you’re done. Anyone who has run this in production knows that’s fantasy.

RAG pipelines fail silently. Empty retrievals, stale embeddings, and irrelevant context all degrade output quality without throwing errors. Your FastAPI app needs to treat retrieval as a critical dependency, not a helper.

This means explicit timeouts, fallbacks, and observability around retrieval. If retrieval fails, the model should know it failed. That should be reflected in the prompt, not hidden. Transparency improves output quality more than clever prompt hacks.
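A sketch of what that could look like: retrieval returns an explicit status, and the prompt builder tells the model when no context is available. The function names, the status strings, and the prompt wording are assumptions for illustration, and `retriever` is any async callable that takes a query and returns a list of strings.

```python
import asyncio


async def retrieve_with_status(retriever, query: str, timeout_s: float = 2.0):
    """Return (documents, status) so that failure is explicit rather than silent."""
    try:
        docs = await asyncio.wait_for(retriever(query), timeout=timeout_s)
    except asyncio.TimeoutError:
        return [], "timeout"
    except Exception:
        return [], "error"
    return docs, ("ok" if docs else "empty")


def build_prompt(question: str, docs: list, status: str) -> str:
    if status == "ok":
        context = "\n".join(f"- {d}" for d in docs)
        return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    # Tell the model that retrieval did not produce usable context.
    return (
        f"No supporting documents are available (retrieval status: {status}). "
        f"Say so if the question needs them.\n\nQuestion: {question}"
    )
```

The same status value is also worth logging, since "empty" and "timeout" point to very different fixes.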

Token limits also matter here. Stuffing everything into context is lazy and expensive. Good RAG systems summarize, rank, and prune aggressively. FastAPI doesn’t care how you do it, but your users will care when latency creeps up.
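Ranking and pruning against a token budget can be as simple as the sketch below. It assumes each chunk already carries a relevance score, and the default `count_tokens` is a crude whitespace split standing in for a real tokenizer.

```python
def prune_context(chunks, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the highest-scoring chunks that fit within the token budget.

    chunks is an iterable of (score, text) pairs.
    """
    selected, used = [], 0
    for score, text in sorted(chunks, key=lambda c: c[0], reverse=True):
        cost = count_tokens(text)
        if used + cost > max_tokens:
            continue  # too large for the remaining budget; a smaller chunk may still fit
        selected.append(text)
        used += cost
    return selected


chunks = [(0.9, "a b c"), (0.5, "d e f g"), (0.8, "h i")]
print(prune_context(chunks, max_tokens=5))  # ['a b c', 'h i']
```

The greedy pass is not optimal in a knapsack sense, but it is predictable and cheap, which matters more on a request path.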

Prompt versioning and controlled evolution

Prompt versioning deserves its own emphasis because it’s one of the few levers you fully control. Models change. APIs evolve. Prompts are where your domain knowledge lives.

Treat prompts like code. Version them. Test them. Roll them back. Your FastAPI app should be able to select prompt versions based on environment, user segment, or experiment flags. If that sounds like overkill, wait until a minor prompt tweak breaks a key customer workflow.
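A minimal in-memory sketch of runtime prompt selection follows. `PromptRegistry` and `PromptVersion` are hypothetical names; a real registry would load versions from a database or config store so that activating a version does not require a deploy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    template: str


class PromptRegistry:
    def __init__(self):
        self._versions = {}  # (name, version) -> PromptVersion
        self._active = {}    # name -> active version

    def register(self, prompt: PromptVersion, activate: bool = False) -> None:
        self._versions[(prompt.name, prompt.version)] = prompt
        if activate or prompt.name not in self._active:
            self._active[prompt.name] = prompt.version

    def activate(self, name: str, version: str) -> None:
        if (name, version) not in self._versions:
            raise KeyError(f"unknown prompt {name}@{version}")
        self._active[name] = version  # a rollback is re-activating an older version

    def get(self, name: str, version: Optional[str] = None) -> PromptVersion:
        # An explicit version (e.g. from an experiment flag) overrides the active one.
        return self._versions[(name, version or self._active[name])]
```

A request handler could pass an experiment flag as `version` for a user segment while everyone else gets the active version, and rolling back is a single `activate` call.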

I’ve lived through that incident. It’s not fun. It is, however, avoidable.

Observability is not optional

If you’re running LLM apps without deep observability, you’re guessing. Logs alone are not enough. You need structured traces that connect HTTP requests to model calls to tool executions.

FastAPI integrates well with modern observability stacks, but you have to be intentional. Log prompts responsibly. Mask sensitive data. Capture latency at every step. When users complain, you should be able to answer “why” with evidence, not intuition.
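Two of those habits, masking and per-step latency capture, fit in a few lines. This is a sketch: the regular expressions are deliberately simple, the `sk-` pattern is an assumed key shape, and `trace` is a plain list where a real system would emit spans to its tracing backend.

```python
import re
import time
from contextlib import contextmanager

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SECRET = re.compile(r"\bsk-[A-Za-z0-9]{8,}\b")  # assumed API-key shape


def mask(text: str) -> str:
    """Replace email addresses and key-like strings before logging."""
    return SECRET.sub("[secret]", EMAIL.sub("[email]", text))


@contextmanager
def timed_step(trace: list, name: str, **fields):
    """Append one structured record per step, including elapsed milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = round((time.perf_counter() - start) * 1000, 2)
        trace.append({"step": name, "ms": elapsed_ms, **fields})


trace = []
with timed_step(trace, "model_call", prompt=mask("reply to bob@example.com")):
    pass  # the model call would go here
```

Because the record is appended in a `finally` block, a step that raises still shows up in the trace with its latency.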

This is where experienced DevOps engineers shine. AI systems don’t replace operational discipline. They amplify the consequences of lacking it.

The uncomfortable conclusion

Building LLM apps with FastAPI is not about frameworks or models. It’s about discipline. FastAPI gives you speed and flexibility. LLMs give you leverage. Neither forgives sloppy design.

If there’s one assumption I’ll challenge directly, it’s the idea that AI makes backend development easier. It doesn’t. It makes it less forgiving. The margin for architectural mistakes shrinks, because the system is probabilistic by default.

The upside is real. When done well, these systems are powerful, adaptable, and genuinely useful. When done poorly, they’re expensive toys.

I’m still optimistic, despite the scars. We’re getting better at this. The patterns are emerging. The tools are maturing. But the teams that succeed are the ones treating LLM apps as serious backend systems, not clever demos with an API wrapper.


Majid Sheikh is the CTO and Agentic AI Developer at Agents Arcade, specializing in agentic AI, RAG, FastAPI, and cloud-native DevOps systems.