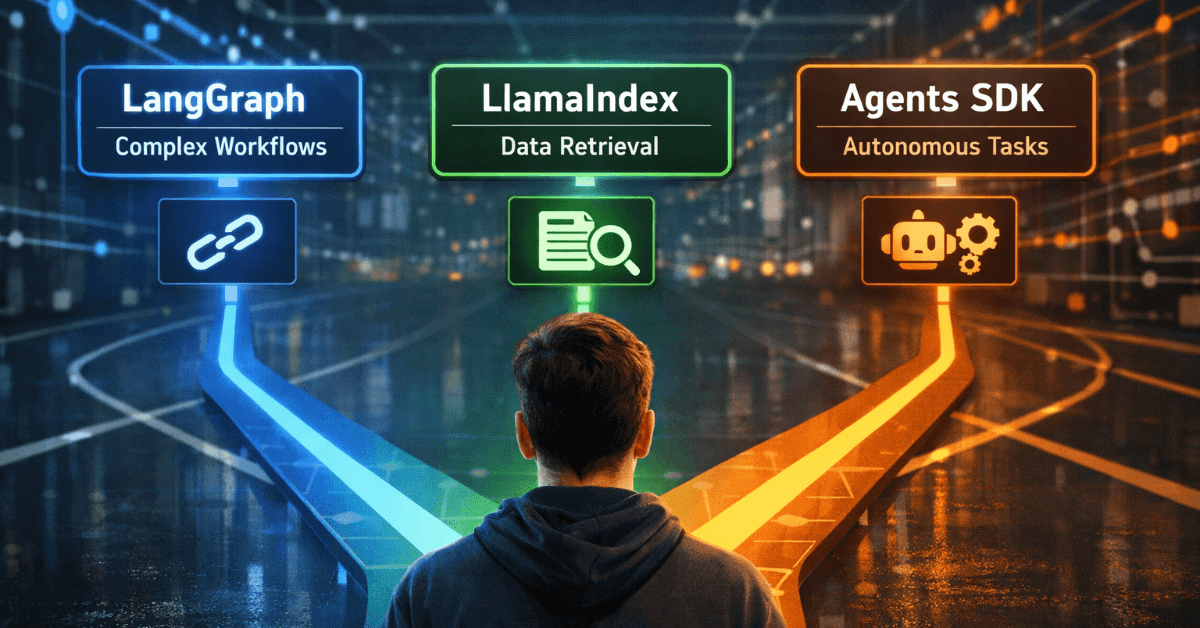

LangChain vs LlamaIndex vs Agents SDK — when to use what

A contrarian opening

Here’s my unpopular opinion to start with: most teams arguing about LangChain versus LlamaIndex versus the OpenAI Agents SDK don’t actually have an “agent” problem. They have a system design problem, and frameworks are just the proxy war. I’ve watched senior engineers burn months wiring abstractions they didn’t need, while junior teams shipped quietly with boring, well-scoped pipelines. The frameworks aren’t the villains. Misuse is.

While LangChain, LlamaIndex, and the OpenAI Agents SDK cover most agentic workflow needs, some teams prefer LangGraph for its architecture and orchestration features. You can read our LangGraph vs OpenAI Agents SDK comparison to see how these two frameworks differ in planning, tool integration, and production readiness.

Choosing the right framework isn’t just about features. It’s about architecture, responsibility boundaries, and production readiness. For a broader foundation on how modern AI agents plan, reason, choose tools, and execute workflows in real systems, see our practical guide to AI agents.

I’m Majid Sheikh, Generative AI engineer at Agents Arcade. I’ve been building production systems long enough to be tired of hype cycles but still optimistic enough to believe this stack is genuinely transformative when used correctly. I’ve shipped LLM systems that survived real traffic, real latency constraints, and real customers who don’t care about your architecture diagrams. So let me be blunt and practical.

This isn’t a neutral comparison. Neutrality is for documentation. This is about when each of these tools actually earns its place in a production AI system, and when it becomes an expensive distraction.

The real question people avoid

Most discussions frame this as a feature comparison. That’s already a mistake. The real question is not “what can this framework do?” but “what architectural responsibility am I outsourcing, and what am I locking myself into?” LLM systems fail in production for three boring reasons: latency explodes, behavior becomes un-debuggable, or ownership gets blurry when something breaks at 2 a.m.

Every framework here makes a strong opinionated bet about where intelligence should live. Understanding those bets matters more than memorizing APIs.

LangChain is not an agent framework, and that’s fine

LangChain’s biggest strength is also the reason it gets abused. It gives you composable primitives that feel like Lego blocks for LLM orchestration. Chains, tools, memory, callbacks. It feels empowering. It also tempts people into building sprawling graphs where no single component is truly accountable.

LangChain shines when you need glue. Not intelligence. Glue.

If your system needs to orchestrate prompts, call tools, stream tokens, log traces, and integrate with half a dozen services inside a FastAPI backend, LangChain is a pragmatic choice. It is not elegant, but it is flexible. That flexibility is precisely why senior engineers can tame it and junior teams often drown in it.

When to use LangChain in production

You use LangChain in production when you already understand your system boundaries and you want a thin orchestration layer, not a decision-making brain. LangChain works best when the control flow lives in your codebase, not inside the framework. The moment you start encoding business logic into chains, you’ve crossed the line.

I’ve seen LangChain succeed in customer support systems where the LLM is clearly a subroutine, not the system. The request comes in, your service decides what needs to happen, LangChain executes a predictable sequence, and the result goes back out. Observability matters here, and LangChain’s callback system helps, even if it feels bolted on.
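A minimal sketch of the "LLM as a subroutine" shape described above. It deliberately uses no LangChain calls: `LLMStep`, `Ticket`, `handle_ticket`, and `fake_llm` are hypothetical names, and `LLMStep` stands in for whatever actually executes the model call (a chain's invoke method, a raw API client, anything that maps a string to a string). The point is only where the routing decision lives.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for the LLM step: any callable from prompt to text.
LLMStep = Callable[[str], str]


@dataclass
class Ticket:
    customer_id: str
    text: str
    is_refund_request: bool


def handle_ticket(ticket: Ticket, draft_reply: LLMStep) -> str:
    """The service owns routing; the LLM only drafts text."""
    # The business rule lives in plain code, not inside a chain.
    if ticket.is_refund_request:
        return "ESCALATED: refund requests go to a human agent."

    prompt = f"Write a short, polite reply to this support message:\n{ticket.text}"
    return draft_reply(prompt)


def fake_llm(prompt: str) -> str:
    """Fake model call so the sketch runs without a network."""
    return "Thanks for reaching out. We are looking into it."


reply = handle_ticket(Ticket("c-1", "My login fails.", False), fake_llm)
escalated = handle_ticket(Ticket("c-2", "I want my money back.", True), fake_llm)
```

Because the framework only ever sees `draft_reply`, swapping the orchestration layer later means replacing one callable, not untangling business rules from chains.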

Where LangChain struggles is long-lived autonomy. The more you lean on memory, dynamic routing, or agent loops, the more brittle it becomes. Debugging emergent behavior across chains is a slow, morale-draining experience. Let me be blunt: if your architecture diagram looks like spaghetti, LangChain didn’t do that. You did.

LlamaIndex is a data system pretending to be a framework

LlamaIndex gets mischaracterized as “just another LLM framework.” That’s lazy thinking. LlamaIndex is a data orchestration system first. Its worldview is simple and, frankly, correct: most useful LLM applications live or die on retrieval quality, not prompt cleverness.

If LangChain is about flow, LlamaIndex is about grounding. Indexing, chunking, embeddings, metadata, retrieval strategies. This is where real systems either shine or collapse into hallucination factories.

The moment your product depends on internal knowledge, documents, policies, or structured domain data, LlamaIndex starts to look less optional and more inevitable.

LlamaIndex for RAG applications

This is where I stop hedging. If you are building a serious RAG system and you are not deeply opinionated about ingestion, indexing, and retrieval, you are building on sand. LlamaIndex earns its keep here because it treats retrieval as a first-class concern, not a plugin.

I’ve used it in production for systems where retrieval accuracy mattered more than model choice. Multi-tenant knowledge bases, per-customer document isolation, hybrid search, metadata filtering. These are not edge cases. They are the job.
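To make per-customer isolation and metadata filtering concrete, here is a toy in-memory retriever. It is not LlamaIndex's API. `Chunk`, `cosine`, and `retrieve` are hypothetical, and the two-dimensional embeddings are made up for illustration. What it shows is the ordering that matters: filter by tenant and metadata first, score similarity second, so another customer's chunks are never even candidates.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    tenant_id: str
    text: str
    embedding: list[float]
    metadata: dict[str, str] = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(chunks, query_embedding, tenant_id, filters=None, top_k=3):
    filters = filters or {}
    # Hard isolation first: other tenants' chunks are never scored.
    candidates = [
        c for c in chunks
        if c.tenant_id == tenant_id
        and all(c.metadata.get(k) == v for k, v in filters.items())
    ]
    # Rank the surviving candidates by similarity to the query.
    candidates.sort(key=lambda c: cosine(c.embedding, query_embedding), reverse=True)
    return candidates[:top_k]


chunks = [
    Chunk("acme", "Refund policy: 30 days.", [1.0, 0.0], {"doc_type": "policy"}),
    Chunk("acme", "Holiday schedule.", [0.0, 1.0], {"doc_type": "hr"}),
    Chunk("globex", "Refund policy: 14 days.", [1.0, 0.0], {"doc_type": "policy"}),
]
hits = retrieve(chunks, [0.9, 0.1], tenant_id="acme", filters={"doc_type": "policy"})
```

In a real system the filter would be pushed down into the vector store rather than applied in Python, but the contract is the same: isolation is a precondition of retrieval, not a post-processing step.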

What LlamaIndex does well is force you to think about data lifecycle. How documents change. How embeddings age. How retrieval impacts latency. It integrates cleanly with vector databases without pretending they’re interchangeable. That honesty matters.
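One way to handle "documents change, embeddings age" is to store a content hash alongside each indexed document and diff it against the live corpus. The sketch below assumes that approach. `content_hash` and `plan_reindex` are hypothetical helpers, not part of LlamaIndex, and the document IDs are invented.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(current_docs: dict[str, str], indexed_hashes: dict[str, str]):
    """Compare live documents against the hashes stored at indexing time."""
    # New or changed documents need fresh embeddings.
    to_embed = [
        doc_id for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]
    # Documents that no longer exist should leave the index.
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return sorted(to_embed), sorted(to_delete)


indexed = {
    "a": content_hash("v1"),
    "b": content_hash("same"),
    "gone": content_hash("old"),
}
current = {"a": "v2", "b": "same", "new": "hello"}
to_embed, to_delete = plan_reindex(current, indexed)
```

Only changed and new documents get re-embedded, which keeps ingestion cost proportional to churn rather than corpus size.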

Where people go wrong is trying to make LlamaIndex the entire application. It’s not. It shouldn’t own your API layer, your auth, or your business logic. Treat it as a specialized subsystem, and it will reward you. Treat it like a magic brain, and you’ll fight it.

The Agents SDK is opinionated by design, and that’s the point

The OpenAI Agents SDK is the newest entrant, and it makes the strongest claim: that agentic workflows should be explicit, structured, and observable. This is not a coincidence. It’s a reaction to years of chaotic “agent” demos that worked once and failed silently forever after.

The SDK assumes that agents are not just chatbots with tools. They are workflows with state, boundaries, and evaluation hooks. That assumption alone disqualifies it for a lot of casual use cases. And that’s a good thing.

This SDK is not trying to be everything. It is trying to be correct.

OpenAI Agents SDK for agentic workflows

You reach for the Agents SDK when autonomy is the product, not a side effect. Multi-step reasoning, tool calling with consequences, handoffs between roles, critique loops. These are systems where implicit control flow becomes dangerous.

In production, agentic workflows fail in two ways. Either they become uncontrollable, or they become impossible to audit. The SDK directly addresses both. It enforces structure where LangChain allows chaos. It makes evaluation a first-class citizen instead of an afterthought.

I’ve found it particularly effective in systems where agents operate on real resources. Databases, APIs, external services. When mistakes cost money or trust, you want guardrails, not cleverness.
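As an illustration of guardrails over cleverness, here is a framework-free sketch of a tool registry that refuses unknown tools and requires explicit approval for destructive ones. This is not the Agents SDK's guardrail API. `ToolSpec`, `ToolDenied`, `run_tool`, and the two example tools are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ToolSpec:
    func: Callable[..., str]
    destructive: bool = False


class ToolDenied(Exception):
    pass


def run_tool(registry, name, args, approved=False):
    spec = registry.get(name)
    if spec is None:
        # The model asked for something outside the allowlist.
        raise ToolDenied(f"unknown tool: {name}")
    if spec.destructive and not approved:
        # Side effects on real resources need a human or policy sign-off.
        raise ToolDenied(f"{name} needs explicit approval")
    return spec.func(**args)


REGISTRY = {
    "lookup_order": ToolSpec(lambda order_id: f"order {order_id}: shipped"),
    "issue_refund": ToolSpec(lambda order_id: f"refunded {order_id}", destructive=True),
}

status = run_tool(REGISTRY, "lookup_order", {"order_id": "42"})

try:
    run_tool(REGISTRY, "issue_refund", {"order_id": "42"})
    denied = False
except ToolDenied:
    denied = True

refund = run_tool(REGISTRY, "issue_refund", {"order_id": "42"}, approved=True)
```

The check sits outside the model's control, so a badly reasoned tool call fails closed instead of touching the database.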

The tradeoff is lock-in. The SDK is built around OpenAI models, OpenAI tooling, and OpenAI’s mental model of agents. It can be pointed at other model providers, but the best-supported path is OpenAI’s. If that makes you uncomfortable, it should. But pretending vendor neutrality is always the highest virtue is naïve. Sometimes the cost of abstraction is higher than the cost of commitment.

A necessary digression on “framework lock-in”

Here’s where people get philosophical instead of practical. Framework lock-in is only a problem if your system is fragile. A well-designed architecture can swap components over time. A poorly designed one is locked in from day one, regardless of framework.

I’ve migrated systems off proprietary tools before. It wasn’t fun, but it was possible because the boundaries were clear. The teams that couldn’t migrate weren’t victims of lock-in. They were victims of unclear ownership and blurred responsibilities.
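"Clear boundaries" can be as small as one interface that your application code depends on instead of a framework type. A minimal sketch, assuming a retrieval boundary: `Retriever`, `KeywordRetriever`, and `build_context` are hypothetical names, and the keyword scorer is a deliberately crude stand-in for a real index.

```python
from typing import Protocol


class Retriever(Protocol):
    """The only retrieval contract the application code knows about."""

    def search(self, query: str, top_k: int) -> list[str]: ...


class KeywordRetriever:
    """Crude stand-in implementation; a framework-backed one would fit the same slot."""

    def __init__(self, docs: list[str]):
        self.docs = docs

    def search(self, query: str, top_k: int) -> list[str]:
        words = query.lower().split()
        scored = [(sum(w in d.lower() for w in words), d) for d in self.docs]
        ranked = sorted(scored, key=lambda pair: -pair[0])
        return [doc for score, doc in ranked if score > 0][:top_k]


def build_context(retriever: Retriever, question: str) -> str:
    # Depends on the Protocol, not on any concrete framework class.
    return "\n".join(retriever.search(question, top_k=2))


docs = ["Refunds are accepted within 30 days.", "Offices close on public holidays."]
context = build_context(KeywordRetriever(docs), "refunds policy")
```

Migrating off a tool then means writing one new class that satisfies `Retriever`, while `build_context` and everything above it stays untouched.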

Choose frameworks that align with your system’s center of gravity. If retrieval is core, LlamaIndex belongs. If orchestration is glue, LangChain fits. If autonomous workflows are the business, the Agents SDK earns its cost.

Latency, the silent killer

None of these frameworks save you from latency. In fact, they often hide it until it’s too late. Production AI systems live and die by response time. Users forgive wrong answers faster than slow ones.
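Hidden latency stops being hidden once every stage is timed separately. A small stdlib-only sketch, assuming you wrap each stage yourself: `timed` and `timings` are hypothetical names, and the `time.sleep` calls stand in for real retrieval and generation work.

```python
import time
from contextlib import contextmanager

# Accumulated wall-clock seconds per named stage.
timings: dict[str, float] = {}


@contextmanager
def timed(stage: str):
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the stage raises, so failures still show their cost.
        timings[stage] = timings.get(stage, 0.0) + (time.perf_counter() - start)


with timed("retrieval"):
    time.sleep(0.01)  # stand-in for a vector store query

with timed("generation"):
    time.sleep(0.02)  # stand-in for a model call

total = sum(timings.values())
```

In production the same numbers would go to your tracing backend, but even a dict like this tells you which layer to blame before you start guessing.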

LangChain pipelines can quietly stack calls. LlamaIndex retrieval can balloon if your indexing strategy is sloppy. Agent loops can spiral if you don’t cap reasoning steps. The frameworks won’t stop you. Experience will.
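Capping reasoning steps is a few lines of code if you own the loop. The sketch below assumes a hypothetical `step` callable that returns an answer or `None` when it needs another iteration. `run_agent`, `max_steps`, and `budget_s` are illustrative names, not any framework's API.

```python
import time
from typing import Callable, Optional


def run_agent(
    step: Callable[[list[str]], Optional[str]],
    max_steps: int = 5,
    budget_s: float = 10.0,
):
    """Call `step` until it answers, the step cap is hit, or the time budget runs out."""
    history: list[str] = []
    deadline = time.monotonic() + budget_s
    for i in range(max_steps):
        if time.monotonic() > deadline:
            return None, f"timeout after {i} steps"
        answer = step(history)
        if answer is not None:
            return answer, f"done in {i + 1} steps"
        history.append(f"step {i + 1}: no answer yet")
    return None, f"step cap reached ({max_steps})"


def never_answers(history: list[str]) -> Optional[str]:
    return None


def answers_on_third_call(history: list[str]) -> Optional[str]:
    return "ok" if len(history) >= 2 else None


stuck = run_agent(never_answers, max_steps=3)
finished = run_agent(answers_on_third_call, max_steps=5)
```

Both limits return a reason string, so a spiraling loop shows up in logs as a named failure instead of a slow request.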

This is why I’m skeptical of teams adopting multiple frameworks at once. Every abstraction adds overhead. Every layer complicates tracing. Pick one primary responsibility per framework, and be ruthless about it.

Evaluation is not optional anymore

One reason the Agents SDK feels heavy is that it assumes evaluation from day one. That’s not academic. That’s survival. If you cannot measure behavior, you cannot improve it. LangChain and LlamaIndex can be evaluated, but they don’t force the issue.

In real systems, regression comes from subtle changes. Prompt tweaks. Model upgrades. New documents. Without evaluation pipelines, you’re flying blind. I’ve watched teams celebrate upgrades that quietly degraded output quality for weeks.
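A regression check does not need to be elaborate to catch a quiet degradation. This sketch assumes a tiny golden set with substring checks. `GOLDEN`, `pass_rate`, `check_regression`, and the two fake systems are hypothetical, and real evaluations usually need richer scoring than `must_contain`.

```python
from typing import Callable

# Hypothetical golden set: each case names a fact the answer must mention.
GOLDEN = [
    {"question": "What is the refund window?", "must_contain": "30 days"},
    {"question": "Which plan includes SSO?", "must_contain": "Enterprise"},
]


def pass_rate(answer_fn: Callable[[str], str], cases=GOLDEN) -> float:
    passed = sum(
        1 for c in cases
        if c["must_contain"].lower() in answer_fn(c["question"]).lower()
    )
    return passed / len(cases)


def check_regression(baseline: float, candidate: float, tolerance: float = 0.0) -> bool:
    """True when the candidate is no worse than the baseline minus the tolerance."""
    return candidate >= baseline - tolerance


def old_system(question: str) -> str:
    if "refund" in question.lower():
        return "Refunds are accepted within 30 days."
    return "SSO is part of the Enterprise plan."


def new_system(question: str) -> str:
    # Simulates an "upgrade" that silently lost a fact.
    if "refund" in question.lower():
        return "Refunds are accepted within 30 days."
    return "SSO is available on paid plans."


baseline = pass_rate(old_system)
candidate = pass_rate(new_system)
safe_to_ship = check_regression(baseline, candidate)
```

Run on every prompt tweak, model upgrade, or document refresh, a gate like this turns "degraded for weeks" into a failed check on the day of the change.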

The future of applied LLM systems belongs to teams who treat evaluation as infrastructure, not ceremony.

So, when should you actually choose each?

If your backend already has strong opinions and you need LLMs as components, LangChain is serviceable and mature. Keep it on a leash.

If your application’s value is grounded knowledge and retrieval accuracy, LlamaIndex is not optional. Build around it, not on top of it.

If you are building true agentic workflows where autonomy, safety, and observability matter, the OpenAI Agents SDK is the most honest tool on the table right now. Accept the tradeoffs consciously.

The mistake is thinking one of these will save you from architectural thinking. None will.

The closing reality check

Framework debates are comfortable because they feel technical. The hard work is defining boundaries, ownership, and failure modes. That work doesn’t trend on social media, but it’s what keeps systems alive.

I’m optimistic because the tooling is finally catching up to production reality. I’m world-weary because I’ve seen too many teams repeat the same mistakes with better APIs. And I’m practical because, at the end of the day, customers don’t care what framework you used. They care that it works.

If you’re done wrestling with this yourself, let’s talk. Visit Agents Arcade for a consultation.

Majid Sheikh is the CTO and Agentic AI Developer at Agents Arcade, specializing in agentic AI, RAG, FastAPI, and cloud-native DevOps systems.